At Carbon Five we install and use many different database engines. Document-oriented databases are proving to be a good fit for more and more of our projects. MongoDB is the most popular of these and provides a powerful set of tools to store and query data, but it’s been plagued by performance problems when used with very large databases or large cluster sizes. Riak is another interesting option that is built from the ground up to perform at scale. But Riak is difficult to set up and has a minimal API that requires a lot more work to manage the data. RethinkDB is a relative newcomer that wants to fill the gap between these.

The trade-off between developer friendliness and high performance is unavoidable, but I’ve been looking for something in the middle. RethinkDB claims to solve the 1-15 problem, which is a database that is reasonable to use as a single node, but can scale up to around 15 nodes with minimal configuration and no changes to the application. Whether or not this claim holds up remains to be seen. In this post I take it for a test drive and provide a qualitative assessment (i.e. no benchmarks) of its ease of use and effectiveness for application development. The question is, what do developers have to give up for the peace of mind of knowing they won’t have to rip out the persistence layer when the app gains popularity (tldr; not much).

Installation and setup

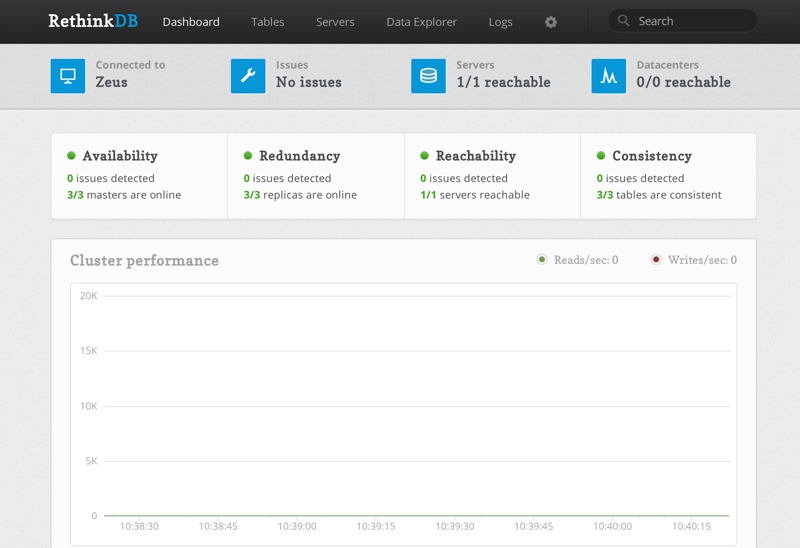

On a Mac, Homebrew makes installation so trivial, it’s not worth further discussion.  Once RethinkDB is running, you can immediately access its built-in administration web server with a web browser on port 8080. The administration pages are well designed and provide status information on all the servers. It also provides an interface to manage databases and tables, and a data explorer to interactively query the database. The data explorer is a great way to get started with RethinkDB’s query language, ReQL, because it provide autocomplete help for all the commands and a clean interface for viewing the results. As I was developing my test application, I would often go to the data explorer to try out queries before embedding them in code.

Once RethinkDB is running, you can immediately access its built-in administration web server with a web browser on port 8080. The administration pages are well designed and provide status information on all the servers. It also provides an interface to manage databases and tables, and a data explorer to interactively query the database. The data explorer is a great way to get started with RethinkDB’s query language, ReQL, because it provide autocomplete help for all the commands and a clean interface for viewing the results. As I was developing my test application, I would often go to the data explorer to try out queries before embedding them in code.

Once you’re ready to begin development, RethinkDB provides officially supported drivers as a JS npm package, Ruby gem, or Python pip package. Community supported drivers are also available for almost all languages in active use. The default configuration of RethinkDB lets your application connect without authentication. This means a fresh clone of an application running in development mode can run against a fresh install of RethinkDB, making things easier for everyone on the team. Unlike MongoDB’s collections, RethinkDB’s tables have to be explicitly created before you can begin inserting into them. You either need some sort of installation script or initialization code in the app to bootstrap new installs. This is only a small concern, since you usually need to add secondary indices and seed data anyway.

ReQL

RethinkDB took an interesting approach with its query language. Rather than specifying a DSL that would need to be represented as a string (like SQL) or object literal (like MongoDB), ReQL is a query specification API implemented in all the supported languages. You create ReQL queries in whatever language you’re using. Like the admin tools, ReQL has a sense of polish that suggests it was far more than an afterthought.

I’ll be providing all my examples in JS since it is the only RethinkDB language I’ve tried. Other languages will look similar, but the results will be returned rather than provided to a callback.

Inserts and Updates

Inserting data into RethinkDB is as easy as you would expect in a document-oriented database. A simple insert looks like this:

r.table("customers")

.insert(customer)

.run(conn, function(err, result) {

console.log(err, result);

})

Customer is an ordinary object to insert, and conn is a database connection with a default database set. Since this is a document database, the object can contain arbitrary amounts of nested data. The insert command can take either a single object or an array of objects for batch inserts.

Notice the function() . function() ... chain pattern. ReQL syntax is heavily influenced by functional programming concepts. The queries work a lot like Underscore.js, and it doesn’t hurt (much) to think of the query commands as operators acting on sequences of data.

The result from an insert is worth examining:

{

"unchanged": 0,

"skipped": 0,

"replaced": 0,

"inserted": 1,

"generated_keys": [

"7644aaf2-9928-4231-aa68-4e65e31bf219"

],

"errors": 0,

"deleted": 0

}

It provides stats on what actually happened in the database (in this case we inserted a new document without an error) and provides the generated primary key so you know how to retrieve the object later. If the customer object already contained an id field, it would be used as the primary key, and no generated_keys would be returned.

You can update documents by either replacing the whole document using the replace command or by altering some of the fields using the update command. In either case, you start by filtering a table down to the documents you want to alter, and then call replace or update to alter them. A replace looks like this:

r.table("customers")

.get("7644aaf2-9928-4231-aa68-4e65e31bf219")

.replace(newCustomer)

.run(...)

This first gets the old customer object and then replaces it with the new. If we had left out the replace command, this query would fetch the old object. With the replace, everything happens in the database. In general, ReQL queries operate entirely in the database and only retrieve the result from the last command in the chain. An update on multiple documents looks like this:

r.table("customers")

.filter({lastName:"Thompson"})

.update({

freeGames: r.row("freeGames").add(1)

})

.run(...)

Here we’re finding all customers with the last name ‘Thompson’ (I’ll discuss filters in more detail later) and updating just the ‘freeGames’ field by incrementing it. The syntax may look a little complicated, but it allows us to change the document atomically. That means that,if you and I both ran this query at the same time, we can expect all the ‘freeGames’ fields to be 2. That would not be the case if we had to retrieve the document, check existing value (0), both increment it (1) and then both replace the document (still 1). This is the real power of a document database over a simple key store.

RethinkDB provides a full suite of document manipulation commands, so if you want to, say, add an object to an array of objects contained within the ‘purchases’ field of a document, you can run the command:

r.table("customers")

.get(id)

.update({

purchases: r.row("purchases").append(newPurchase)

})

.run(...)

and it will also execute atomically.

Querying

ReQL is designed to support data retrieval, starting with basic fetches and filtered searches, on through reporting style queries, and into light data analysis (i.e. up to the point where Hadoop becomes the most reasonable option). We already have some idea what fetching looks like from the update syntax. Retrieving a document by primary key looks like this:

r.table(‘animals’).get(“7644aaf2-9928-4231-aa68-4e65e31bf219”).run(...)

The filter command has a few different styles. You can find documents that have fields equal to given parameters using the object literal style:

r.table('customers').filter({lastName:'Thompson'}).run(...)

You can also use the r.row() command to generate a filter condition on a property like so:

r.table('customers').filter(r.row('lastName').eq('Thompson')).run(...)

Here, the eq could be replaced with any of a number of comparison operations. Finally, you can write a third equivalent query using the function syntax:

r.table('customers')

.filter(function(customer) {

return customer('lastName').eq('Thompson')

})

.run(...)

This is an interesting style. At first glance, it looks like you’re providing a function to be run on the server. But customer is not a document in this case. It’s equivalent to r.row in the example above. The function is called immediately and returns a query specification. This is clever (maybe too clever?). As long as you understand what’s really going on here, you are free to pretend that the function really is operating on server data.

Complex Queries

MongoDB started with a two-pronged approach to querying: An object-literal syntax for fetching and filtering data, and a map-reduce implementation that ran JS functions on the server for more complex data analysis. When it became clear that map-reduce was too slow, MongoDB rolled out the excellent aggregation framework that provides powerful commands for transforming data in a collection. RethinkDB starts with lasers, 8 o’clock, day one. Document transformation and aggregation is built into ReQL.

Like most query languages. ReQL optimizes queries transparently (meaning you shouldn’t need to care how). The upside of this is that 95% of the time your queries will be efficient without you really trying. The downside is that it’s really hard to know when you’re in the other 5%. ReQL provides lots of commands for working with documents, and, as it’s all new to me, it’s hard to grasp exactly how powerful the language is without more experience (similarly, it took a long time to fully grasp MongoDB’s aggregation frameworks capability). My first impression, however, is that it is at least sufficiently powerful for application development and relatively easy to use.

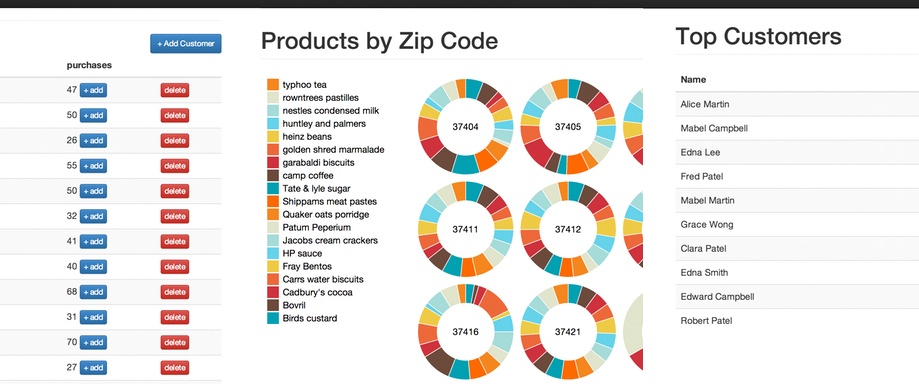

Say we have a collection of customer documents, each of which has the customer’s zip code and a list of purchases that contain a product name. Now say we want to run a report to figure out which products are selling in which zip codes (see this in action in the test project). We could run the query:

r.table('customers')

.hasFields('purchases')

.concatMap(function(customer) {

return customer('purchases').map(function(purchase) {

return {zip:customer('address')('zip'), product:purchase('name')};

});

})

.groupBy('zip','product', r.count)

.run(...)

Breaking this down:

- The

hasFields()command filters out customers with no purchases at all (this avoids an error down the line). - The

concatMap()command takes an array of objects returned by it’s argument and streams their elements out as a single sequence. - The

map()function in this case transforms each purchase in each row into a new document that contains the zip code from the customer’s address and the product name from the purchase. So, by the end of theconcatMap()we have one document for each purchase in each customer document that looks like{zip:12345, product:'Something'}. - The

groupBy()command finds all matching pairs of zip and product name and counts them. After thegroupBy()command, we have one document for each unique pair of zip and product name that contains the number of these pairs in areductionfield.

Although the syntax may take a little adjustment, the style of programming should be familiar to anyone who has used MongoDB aggregations or is comfortable with functional programming.

Joins

One of RethinkDB’s main marketing points has been its support for joins. It is the only document-oriented database I know of that does this. Joins are less important in document databases than in relational databases. Complex documents eliminate the need to scatter object data across multiple tables and then join it back together before entering the app. If you do decide you need to separate related data into multiple tables, it begs the question of why you would need to query the data from both tables simultaneously. There will be reasons, but there also will be cheap solutions that don’t involve joins.

Necessary or not, RethinkDB has joins. One place this give you significantly more power is when running reporting-style queries against multiple tables. MongoDB only supports map-reduce and aggregation against a single collection. RethinkDB lets you join two or more tables together before aggregating their data.

Let’s see how this works: Assume the same dataset as above. Additionally, assume we have a visits table with customer and hits fields that describe how many times a customer visits our website each day (again you can see this in action in the test project). Now say we want to find out how many times our top 10 customers hit our website (presumably because we’re very bored). The query looks like this:

r.table('customers')

.hasFields('purchases')

.map(function(customer) {

return {

id: customer('id'),

firstName: customer('firstName'),

lastName: customer('lastName'),

purchaseTotal: customer('purchases')('amount')

.reduce(function(acc,amount) {

return acc.add(amount);

}, 0)

}

})

.orderBy(r.desc('purchaseTotal')).limit(10)

.innerJoin(

r.table('visits').groupBy('customer', r.sum('hits')),

function( customer, visit ) {

return customer('id').eq(visit('group')('customer'));

}

)

.map({

id:r.row('left')('id'),

firstName:r.row('left')('firstName'),

lastName:r.row('left')('lastName'),

purchases:r.row('left')('purchaseTotal'),

hits:r.row('right')('reduction')

})

Once again we start with the entire table of customers and remove any that have no purchases. This time we map() each customer into a new document that contains only the id, firstName, lastName, and the total money spent in all purchases. We get the latter by creating a reduction over purchases that sums the amount fields. There may be a simpler way to sum over an array, but I haven’t found it. Once we have our streamlined customers, we order them by purchaseTotal and slice off the top 10. We then join this to another sequence created by grouping the visits table by customer and summing the hits. The second argument to the innerJoin() is a predicate that determines when the documents should be joined. Once we’ve joined our top customers to their respective total web visits, we map the documents to a simpler structure just because we can.

This query isn’t very fast. The innerJoin() commands starts with a cartesian product and filters out all the pairs that fail the predicate. It’s possible that the query could be rewritten to take advantage of eqJoin(), which uses indexes and primary keys, but it is not obvious how that would work for this query. Joins open up new possibilities for reporting, but making those queries fast enough for real time access will be a challenge.

Conclusion

I started this evaluation after hearing about a database with impressive-sounding administration, performance, and scaling features, and set out to find out whether it would be too painful to develop against. I found that working with RethinkDB is actually a pleasure. All of its developer-facing parts are well designed and, so far as I could tell, bug free. ReQL is an interesting and powerful query language. It will be a while before the RethinkDB team works out the technical kinks, people start using it at scale, the inevitable failure reports start rolling in, and once again the RethinkDB team works out the kinks. Until then, it’s way too early to recommend this database wholeheartedly. What I can say is that I’m looking forward to using it on future personal projects, and I’m excited to see what the future holds for RethinkDB.

Update

The original version of the top customers query used a potentially unsafe reduce function (see how map/reduce queries are executed). Thanks to Michel from RethinkDB for pointing this out.